Hello! Thank you for taking the time to view my blog and (possibly) follow along! I have been spending time preparing for the DEVNET SPAUTO specialist certification, and I hope to create some great content that’s publicly available for those trying to achieve this cert.

I’ve been gathering all the lab materials and automation I’ve created at the following personal repository:

This material will also be included inside the WIKI pages for the repo.

For my DEVNET SPAUTO studies I’ve decided to dedicate quite some time to XTC, as I have never used or seen this application in a production Service Provider environment. This topic falls under the Automation and Orchestration domain (30%) of the SPAUTO blueprint.

4.6 Implement XR traffic controller

(including topology information transfer to XTC)

Let’s get some general terms out of the way before we continue with our lab demo.

PCEP

PCEP (Path Computation Element Communication Protocol) is a client/server protocol that runs over TCP port 4189. It defines two main roles:

- Path Computation Element (PCE) – A software controller acting as the server within the PCEP protocol. The PCE has a global view of the entire topology of a network and is able to centralize Path Computation and apply Traffic-Engineering policies. PCE can be clustered for redundancy.

- Path Computation Client (PCC) – The clients are network devices that can act as IP/MPLS head-end Label Edge Routers (LER). When signaling LSPs across a network (or a multi-domain network), the PCC devices receive their instructions from the PCE. Optionally, local parameters can be combined with the information received from the PCE when the LSP is signaled.

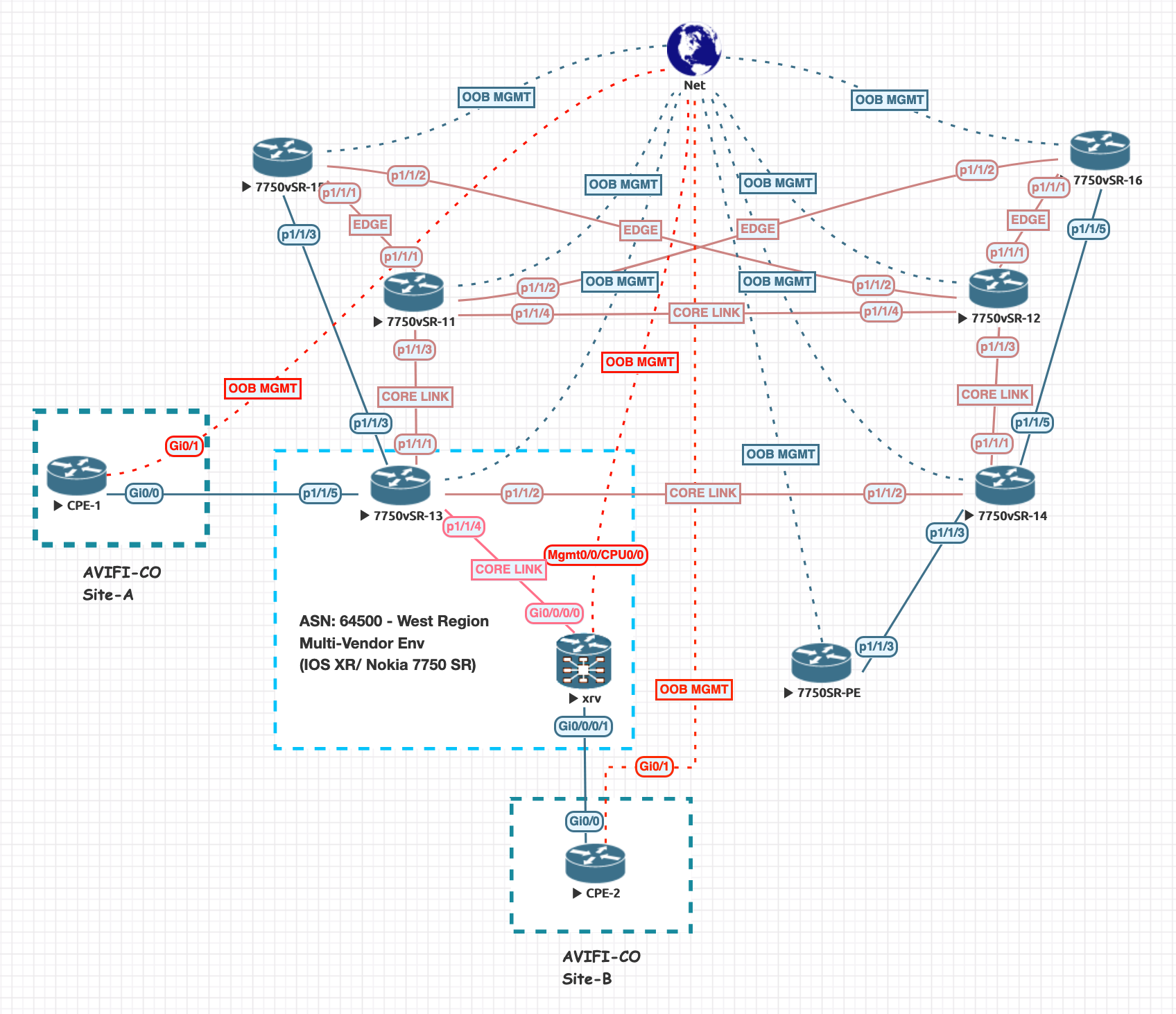

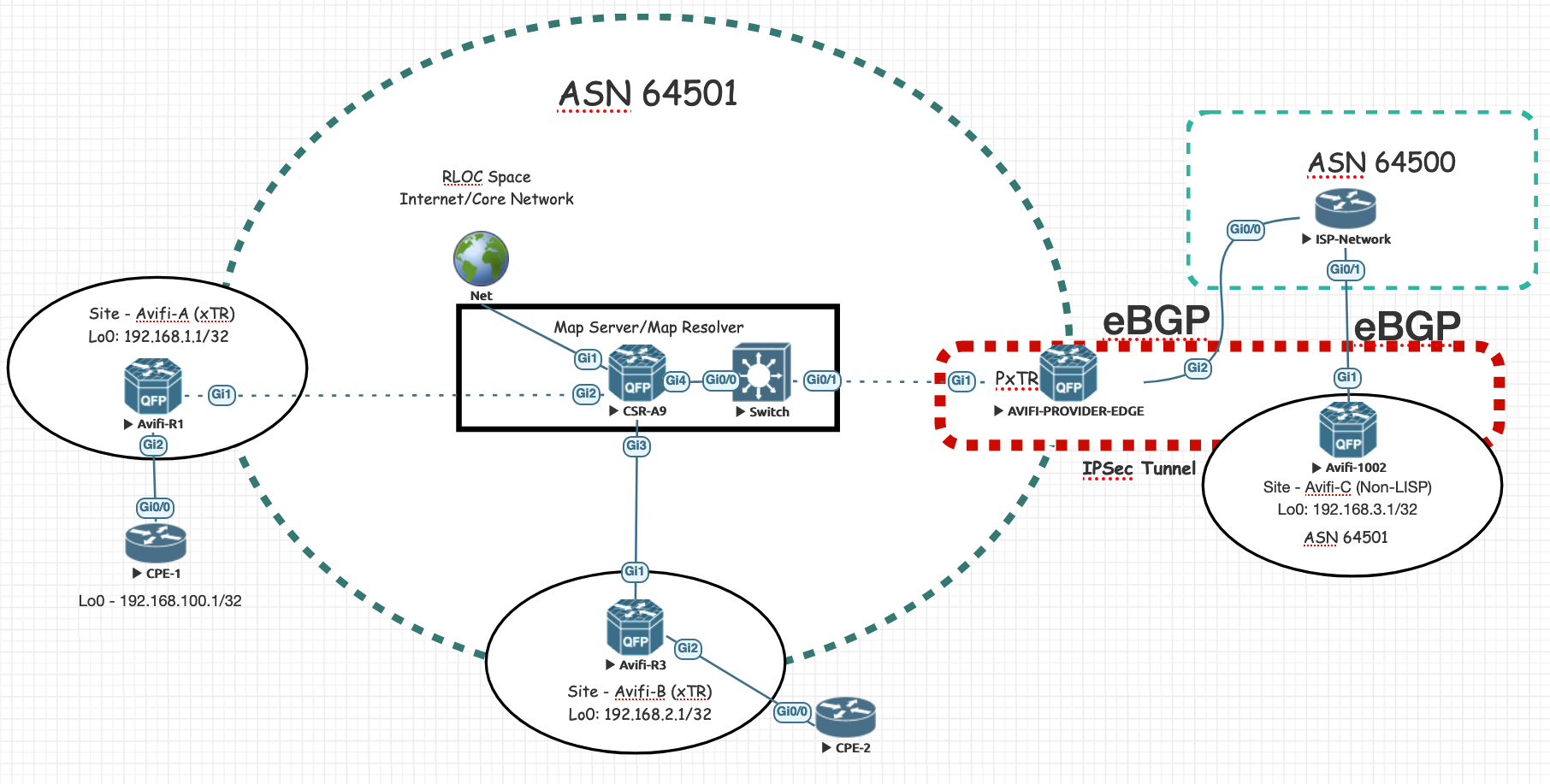

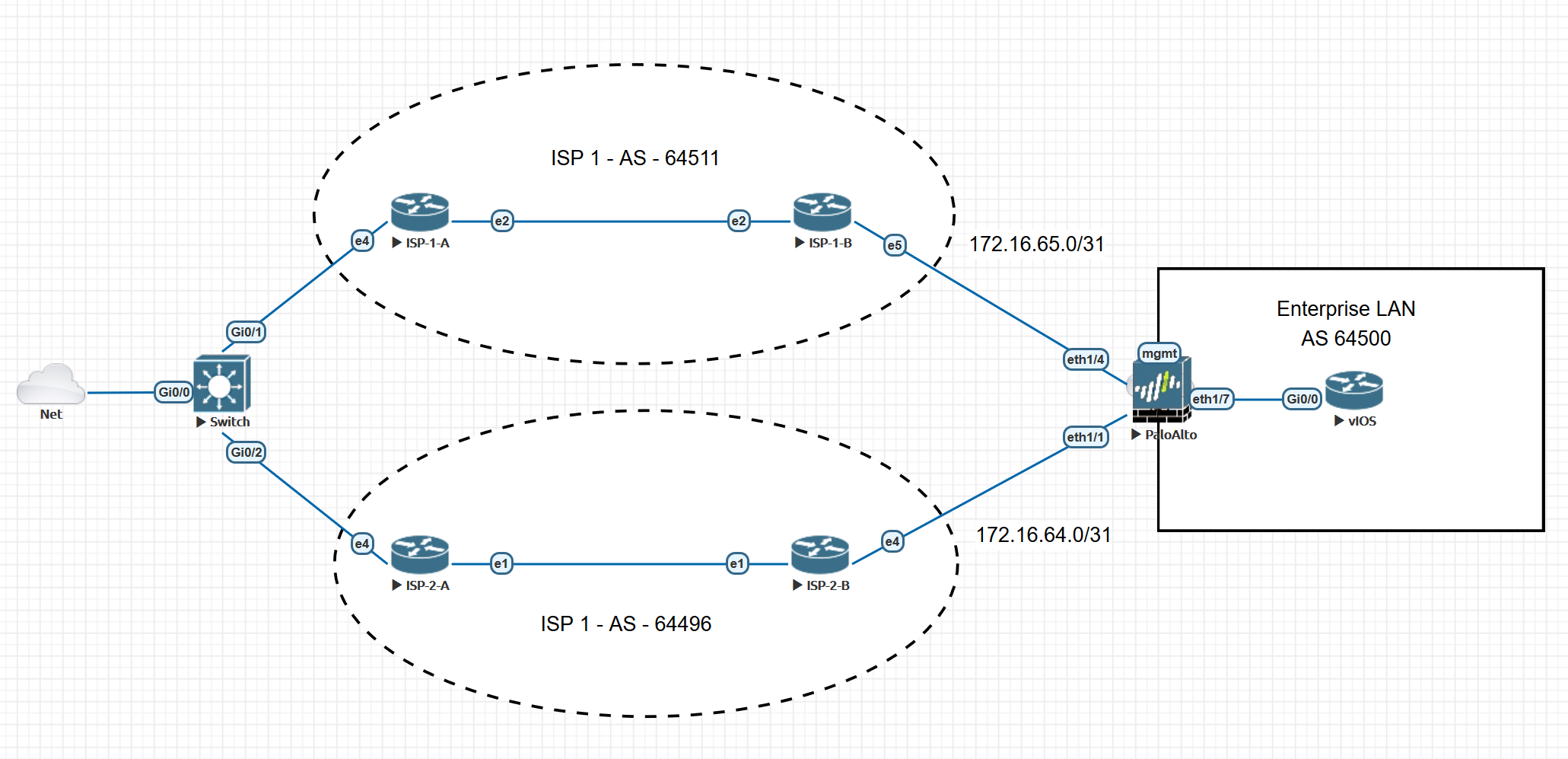
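
To make the PCE/PCC exchange a little more concrete, here is a minimal Python sketch that decodes the 4-byte common header every PCEP message starts with. The field layout and message type numbers follow RFC 5440 as I understand it; the function name `parse_pcep_header` and the sample bytes are my own illustration, not something captured from the lab.

```python
import struct

# Base PCEP message types as listed in RFC 5440.
PCEP_MESSAGE_TYPES = {
    1: "Open",
    2: "Keepalive",
    3: "PCReq",
    4: "PCRep",
    5: "PCNtf",
    6: "PCErr",
    7: "Close",
}

PCEP_PORT = 4189  # TCP port used by PCEP


def parse_pcep_header(data: bytes) -> dict:
    """Decode the 4-byte PCEP common header (hypothetical helper)."""
    if len(data) < 4:
        raise ValueError("PCEP common header is 4 bytes long")
    ver_flags, msg_type, length = struct.unpack("!BBH", data[:4])
    return {
        "version": ver_flags >> 5,   # top 3 bits of the first byte
        "flags": ver_flags & 0x1F,   # remaining 5 bits
        "type": PCEP_MESSAGE_TYPES.get(msg_type, f"Unknown({msg_type})"),
        "length": length,            # total message length, header included
    }


# A Keepalive carries no objects, so it is only the header:
# version 1, type 2, length 4. These bytes are hand-built for the example.
keepalive = bytes([0x20, 0x02, 0x00, 0x04])
header = parse_pcep_header(keepalive)
print(header)
```

Stateful PCE extensions add further message types on top of these, which the sketch would simply report as unknown.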

There are several implementations of PCE solutions, like Juniper’s NorthStar and Cisco Open SDN controllers (OpenDaylight). However, in our lab topology we will be using a Cisco IOSXRv as the XTC (PCE) server. All other devices that query the XTC device (PCE) for information will act as PCC clients. We have two devices acting as Route-Reflectors in our lab, and we will also enlist them as a synchronized pair of XR Traffic Controllers.

BGP Link-State

BGP-LS is a newer address family for BGP (AFI=16388, SAFI=71). This family distributes network link-state information to the northbound controller (XTC/PCE, Juniper NorthStar, OpenDaylight, etc.). It lets BGP carry the data needed for Traffic-Engineering by mapping the network out, regardless of reachability or separate ASNs/domains. This works because the network’s IGP (OSPF or IS-IS) link-state database is exported (distributed) through BGP.
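
As a rough illustration of where those AFI/SAFI numbers end up, the sketch below encodes the Multiprotocol Extensions capability a BGP speaker would advertise in its OPEN message for the link-state family. The capability layout (code 1, then AFI, a reserved byte, and SAFI) is taken from RFC 4760 as I understand it; the helper name `mp_capability` is hypothetical.

```python
import struct

BGP_LS_AFI = 16388     # AFI quoted in the text above
BGP_LS_SAFI = 71       # SAFI quoted in the text above
CAP_MULTIPROTOCOL = 1  # Multiprotocol Extensions capability code


def mp_capability(afi: int, safi: int) -> bytes:
    """Encode one Multiprotocol Extensions capability (hypothetical helper)."""
    # Capability value: 2-byte AFI, 1 reserved byte, 1-byte SAFI.
    value = struct.pack("!HBB", afi, 0, safi)
    # Capability header: 1-byte code, 1-byte length of the value.
    return struct.pack("!BB", CAP_MULTIPROTOCOL, len(value)) + value


capability = mp_capability(BGP_LS_AFI, BGP_LS_SAFI)
print(capability.hex())
```

If both peers advertise this capability, the session can carry link-state NLRI alongside the usual address families.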

Okay, let’s head over to the demo! Our goal is to deploy an LSP across our IP/MPLS network with Segment-Routing as the underlying transport. We will also deploy an LSP across a multi-domain (ISIS-CORE & ISIS-100) network. This multi-domain LSP is an incredible feature! Our PE1 LSP will have a far-end destination of PE101, which resides in a different domain, and PE1 <> PE101 do not have network reachability to each other.

XTC

XR Traffic Controller will be an IOSXRv device in our network. This device will have full PCE Server capabilities.

PRE-REQs:

There will be some basic information pertaining to Segment Routing, but we will not go into great detail on how to configure SR in our network.

- Ensure your BGP sessions to the predetermined IOSXR device that will become the XTC Controller have BGP-LS enabled.

- Ensure link-state information is being distributed from your IGP. In our case, we are using IS-IS. From the topology map, we are redistributing link-state information from our Provider Edge devices PE4 and PE2 as they are the edge devices between this Multi-Domain (ISIS) topology. This information is all propagated back to the Route-Reflector which is going to be our XTC/PCE controller.

- Example IS-IS link-state distribution:

config t

router isis core

distribute link-state level 2

- Enable the link-state address family inside the BGP instance. Since I am enabling this on a Route-Reflector with a CLIENTS group, I will also add the family to the neighbor group. It’s important to add the address-family to the main BGP configuration first; otherwise the commit will fail. This family will also need to be added on the remote clients peering with this group.

config t

router bgp 65000

address-family link-state link-state

exit

neighbor-group CLIENTS

address-family link-state link-state

commit

- Enable XTC on the virtualized IOSXR Route Reflectors with state-sync to the neighboring RR. I chose to use an RR to save resources and to make getting started easier, since all BGP-LS information is readily available within this device.

pce

address ipv4 {{loopback0}}

logging no-path

!

state-sync ipv4 172.16.0.201

segment-routing

!

end
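
Since the snippet above already uses a `{{loopback0}}` placeholder, here is a small Python sketch of how the same block could be rendered for both Route Reflectors so that each one state-syncs to the other. It uses plain string replacement rather than a template engine. The `{{peer}}` placeholder is my own addition, and I am assuming 172.16.0.201 is RR1 and 172.16.0.202 is RR2, based on the addresses used elsewhere in this post.

```python
# Same lines as the PCE snippet above, with the state-sync address
# turned into a second placeholder ({{peer}} is a hypothetical name).
PCE_TEMPLATE = """\
pce
address ipv4 {{loopback0}}
logging no-path
!
state-sync ipv4 {{peer}}
segment-routing
!
"""

# Assumed Loopback0 addresses of the two Route Reflectors.
RRS = {
    "AS65000_RR1": "172.16.0.201",
    "AS65000_RR2": "172.16.0.202",
}


def render_pce(loopback0: str, peer: str) -> str:
    """Fill in both placeholders with plain string replacement."""
    return (PCE_TEMPLATE
            .replace("{{loopback0}}", loopback0)
            .replace("{{peer}}", peer))


configs = {}
for name, address in RRS.items():
    # The state-sync peer is simply "the other RR" in a two-node pair.
    peer = next(a for n, a in RRS.items() if n != name)
    configs[name] = render_pce(address, peer)

print(configs["AS65000_RR2"])
```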

Verify there is BGP-LS information available at the RRs acting as the XTC PCE server. This is a must before moving forward!

show bgp link-state link-state

Verify that link-state topology is available for the PCE. This command is run from the PCE/XR Traffic Controller (IOSXR RR).

show pce ipv4 topology

All of the hosts within our topology should be available in the link-state data. An easy way to list them is to filter the command output as follows:

RP/0/0/CPU0:AS65000_RR2#show pce ipv4 topology | include Host

Sat May 22 21:03:24.507 UTC

Host name: AS65000_P1

Host name: AS65000_PE1

Host name: AS65000_PE3

Host name: AS65000_P2

Host name: 1720.1610.0022

Host name: AS65000_P1

Host name: AS65000_RR1

Host name: AS65000_PE1

Host name: 1720.1610.0022

Host name: AS65000_P1

Host name: AS65000_PE3

Host name: AS65000_P1

Host name: AS65000_PE4

Host name: 1720.1610.0022

Host name: AS65000_PE1

Host name: AS65000_P2

Host name: AS65000_PE100

Host name: AS65000_PE4

Host name: AS65000_P2

Host name: AS65000_PE3

Host name: AS65000_RR1

Host name: AS65000_RR2

Host name: AS65000_PE100

Host name: 1720.1610.0022

Host name: AS65000_PE101

Host name: AS65000_PE101

Host name: AS65000_PE100
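
The filtered output repeats each host many times, so a quick way to check that every node made it into the topology is to de-duplicate the names. The Python sketch below does that on a shortened copy of the output above; the function name `unique_hosts` is my own, and how you collect the output from the router is left out.

```python
# A shortened copy of the "show pce ipv4 topology | include Host" output.
SAMPLE = """\
Sat May 22 21:03:24.507 UTC
Host name: AS65000_P1
Host name: AS65000_PE1
Host name: 1720.1610.0022
Host name: AS65000_P1
Host name: AS65000_RR2
"""


def unique_hosts(output: str) -> list:
    """Return the sorted, de-duplicated host names found in the output."""
    hosts = set()
    for line in output.splitlines():
        label, sep, value = line.partition(":")
        # Only keep lines of the form "Host name: <value>".
        if sep and label.strip() == "Host name":
            hosts.add(value.strip())
    return sorted(hosts)


hosts = unique_hosts(SAMPLE)
print(hosts)
```

An entry such as 1720.1610.0022 looks like a node whose hostname was not resolved, so it shows up in dotted system-ID form instead.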

LSP Configuration (Inter-Domain LSP)

Let’s create an example LSP from our node AS65000_PE1 to PE101 in our foreign IS-IS domain 100.

We will provide the traffic-eng configuration within MPLS to use our PCE peer (RR2). We will source our connection from our Loopback0 address at PE1.

Before we begin, let’s check that the computed path is available at the PCE (XTC). If the path is not available, some BGP-LS data could be missing and further troubleshooting is necessary before continuing. Without a path, the LSP will not form at the PE.

Our PE1 device does NOT have reachability into IS-IS Domain 100. We can verify this by attempting to reach the Loopback0 at PE101:

RP/0/0/CPU0:AS65000_PE1#show ip int br

Sun May 23 16:32:25.952 UTC

Interface IP-Address Status Protocol Vrf-Name

Loopback0 172.16.0.11 Up Up default

<------omitted------>

RP/0/0/CPU0:AS65000_PE1#ping 172.16.100.101

Sun May 23 16:08:08.862 UTC

Type escape sequence to abort.

Sending 5, 100-byte ICMP Echos to 172.16.100.101, timeout is 2 seconds:

UUUUU

Success rate is 0 percent (0/5)

RP/0/0/CPU0:AS65000_PE1#

However, if we perform a path computation from the XTC device, which has a full view of the network topology, we should be able to see some results:

RP/0/0/CPU0:AS65000_RR2#sh pce ipv4 path source 172.16.0.11 destination 172.16.100.101

Sun May 23 16:32:47.661 UTC

Path:

----:

Hop0: 10.0.0.2

Hop1: 10.0.0.6

Hop2: 10.0.0.10

Hop3: 11.0.0.5
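
If you want to script this pre-check, the hop list is easy to pull out of the output. Here is a minimal Python sketch that parses the `HopN:` lines shown above and treats an empty result as "no path". The names `parse_hops` and `has_path` are hypothetical helpers, and I have not checked what the router prints when no path exists, so the empty-output case is an assumption.

```python
import re

# Copy of the path computation output shown above.
PATH_OUTPUT = """\
Path:
----:
Hop0: 10.0.0.2
Hop1: 10.0.0.6
Hop2: 10.0.0.10
Hop3: 11.0.0.5
"""

HOP_RE = re.compile(r"^\s*Hop(\d+):\s*(\S+)\s*$")


def parse_hops(output: str) -> list:
    """Return hop addresses ordered by their hop index."""
    hops = {}
    for line in output.splitlines():
        match = HOP_RE.match(line)
        if match:
            hops[int(match.group(1))] = match.group(2)
    return [hops[index] for index in sorted(hops)]


def has_path(output: str) -> bool:
    """Assume the PCE found a path if at least one hop was printed."""
    return bool(parse_hops(output))


print(parse_hops(PATH_OUTPUT))
```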

Okay, so our XTC/PCE Server has a path to build an LSP! Start by configuring MPLS Traffic Engineering to use the PCE server.

conf t

!

mpls traffic-eng

pce

peer source ipv4 172.16.0.11

peer ipv4 172.16.0.202

!

segment-routing

stateful-client

!

commit

end

Now, let’s configure the new tunnel interface to use IGP metrics across our topology with a path-option determined by the PCE/XTC.

conf t

!

interface tunnel-te101

ipv4 unnumbered Loopback0

autoroute destination 172.16.100.101

destination 172.16.100.101

path-selection

metric igp

!

path-option 1 dynamic pce address ipv4 172.16.0.202

!

commit

end
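
Because every PCE-delegated tunnel in this lab follows the same pattern, the interface block lends itself to being generated. Below is a minimal Python sketch that builds the tunnel configuration from a tunnel ID, a destination, and the PCE address. The function name `tunnel_config` and its parameters are my own, and the indentation is only for readability. The optional `segment_routing` flag appends the keyword used on the path-option in the Segment Routing section of this post.

```python
def tunnel_config(tunnel_id: int, destination: str, pce: str,
                  segment_routing: bool = False,
                  autoroute: bool = True) -> str:
    """Build a tunnel-te interface block (hypothetical helper)."""
    path_option = f"path-option 1 dynamic pce address ipv4 {pce}"
    if segment_routing:
        path_option += " segment-routing"

    lines = [
        f"interface tunnel-te{tunnel_id}",
        " ipv4 unnumbered Loopback0",
    ]
    if autoroute:
        lines.append(f" autoroute destination {destination}")
    lines += [
        f" destination {destination}",
        " path-selection",
        "  metric igp",
        " !",
        f" {path_option}",
        "!",
    ]
    return "\n".join(lines)


# The inter-domain tunnel from PE1 towards PE101, using RR2 as the PCE.
config = tunnel_config(101, "172.16.100.101", "172.16.0.202")
print(config)
```

Generating the block this way keeps the destination and the autoroute destination in step, which is an easy thing to get wrong when typing it by hand.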

The tunnel should now be built and established! Let’s take a look at our tunnel details. Notice we are using the computed path from the PCE! This path does not exist in our local route table, particularly the last hop into the unknown territory of IS-IS Domain 100.

Name: tunnel-te101 Destination: 172.16.100.101 Ifhandle:0x1f0

Signalled-Name: AS65000_PE1_t101

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option 1, type dynamic pce 172.16.0.202 (Basis for Setup, path weight 220)

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 0 kbps CT0

Creation Time: Sun May 23 08:49:04 2021 (07:54:00 ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: IGP (interface)

Path Selection:

Tiebreaker: Min-fill (default)

Hop-limit: disabled

Cost-limit: disabled

Path-invalidation timeout: 10000 msec (default), Action: Tear (default)

AutoRoute: disabled LockDown: disabled Policy class: not set

Forward class: 0 (default)

Forwarding-Adjacency: disabled

Autoroute Destinations: 1

Loadshare: 0 equal loadshares

Auto-bw: disabled

Fast Reroute: Disabled, Protection Desired: None

Path Protection: Not Enabled

BFD Fast Detection: Disabled

Reoptimization after affinity failure: Enabled

Soft Preemption: Disabled

History:

Tunnel has been up for: 07:45:13 (since Sun May 23 08:57:51 UTC 2021)

Current LSP:

Uptime: 07:45:13 (since Sun May 23 08:57:51 UTC 2021)

Path info (PCE computed path):

Hop0: 10.0.0.3

Hop1: 10.0.0.7

Hop2: 10.0.0.11

Hop3: 11.0.0.4

Displayed 1 (of 5) heads, 0 (of 0) midpoints, 0 (of 2) tails

Displayed 1 up, 0 down, 0 recovering, 0 recovered heads
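
The single most useful line in that output is the status line, and it is simple to check programmatically. The Python sketch below splits it into key/value pairs and compares them with the values seen on the healthy tunnel above. The helper names are hypothetical, and the "down" example line is made up for the comparison rather than taken from a router.

```python
import re

# Status line copied from the tunnel details above.
STATUS_LINE = "Admin: up Oper: up Path: valid Signalling: connected"

# Values observed on the working tunnel in this lab.
EXPECTED = {
    "admin": "up",
    "oper": "up",
    "path": "valid",
    "signalling": "connected",
}


def parse_status(line: str) -> dict:
    """Turn 'Key: value Key: value' into a lower-cased dictionary."""
    return {key.lower(): value
            for key, value in re.findall(r"(\w+):\s*(\S+)", line)}


def tunnel_is_healthy(status: dict) -> bool:
    """True only if every expected field has the expected value."""
    return all(status.get(key) == value for key, value in EXPECTED.items())


status = parse_status(STATUS_LINE)
print(status, tunnel_is_healthy(status))
```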

The same tunnel configuration has been applied in the reverse direction, from PE101 back towards PE1.

Let’s take a quick look at the tunnel configuration from PE101:

RP/0/0/CPU0:AS65000_PE101#show run interface tunnel-te11

Sun May 23 20:36:42.456 UTC

interface tunnel-te11

ipv4 unnumbered Loopback0

autoroute destination 172.16.0.11

destination 172.16.0.11

path-selection

metric igp

!

path-option 1 dynamic pce address ipv4 172.16.0.202

!

And finally, let’s validate that our MPLS TE tunnel has a valid LSP path from PE101 to PE1:

RP/0/0/CPU0:AS65000_PE101#traceroute mpls traffic-eng tunnel-te 11

Sun May 23 20:44:30.904 UTC

Tracing MPLS TE Label Switched Path on tunnel-te11, timeout is 2 seconds

Codes: '!' - success, 'Q' - request not sent, '.' - timeout,

'L' - labeled output interface, 'B' - unlabeled output interface,

'D' - DS Map mismatch, 'F' - no FEC mapping, 'f' - FEC mismatch,

'M' - malformed request, 'm' - unsupported tlvs, 'N' - no rx label,

'P' - no rx intf label prot, 'p' - premature termination of LSP,

'R' - transit router, 'I' - unknown upstream index,

'X' - unknown return code, 'x' - return code 0

Type escape sequence to abort.

0 11.0.0.4 MRU 1500 [Labels: 24033 Exp: 0]

L 1 11.0.0.5 MRU 1500 [Labels: 24020 Exp: 0] 0 ms

. 2 *

. 3 *

! 4 10.0.0.2 30 ms

RP/0/0/CPU0:AS65000_PE101#
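
Note that hops 2 and 3 time out, yet the final hop still answers with `!`. To read a trace like this in a script, the sketch below pulls out the hop number, the return code, and the responding address, then checks whether the last hop reported success. The regular expression and the helper names are my own and only cover the line shapes seen in this output.

```python
import re

# Hop lines copied from the traceroute output above.
TRACE = """\
0 11.0.0.4 MRU 1500 [Labels: 24033 Exp: 0]
L 1 11.0.0.5 MRU 1500 [Labels: 24020 Exp: 0] 0 ms
. 2 *
. 3 *
! 4 10.0.0.2 30 ms
"""

# Optional one-character return code, hop number, then an address or '*'.
HOP_RE = re.compile(
    r"^\s*(?:(?P<code>[^\d\s])\s+)?(?P<hop>\d+)\s+"
    r"(?P<addr>\*|\d+(?:\.\d+){3})"
)


def parse_trace(output: str) -> list:
    """Return (hop, code, address) tuples; code is None for hop 0."""
    hops = []
    for line in output.splitlines():
        match = HOP_RE.match(line)
        if match:
            hops.append((int(match.group("hop")),
                         match.group("code"),
                         match.group("addr")))
    return hops


def reached_egress(hops: list) -> bool:
    """'!' on the last hop means the LSP egress replied successfully."""
    return bool(hops) and hops[-1][1] == "!"


hops = parse_trace(TRACE)
print(hops, reached_egress(hops))
```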

MPLS LSP From XTC with Segment Routing as Transport

This scenario is almost identical, but we take full advantage of Segment-Routing as the transport. We have already configured MPLS Traffic-Engineering to contact the PCE server for path computation, and everything has been verified. Now, when creating our tunnel interfaces, our path-options will still be dynamic from the PCE, but with Segment Routing. Let’s take a look at what a tunnel looks like from PE1 to PE4 within the same IS-IS domain:

RP/0/0/CPU0:AS65000_PE1#show run interface tunnel-te10044

Sun May 23 20:45:28.282 UTC

interface tunnel-te10044

ipv4 unnumbered Loopback0

destination 172.16.0.44

path-selection

metric igp

!

path-option 1 dynamic pce address ipv4 172.16.0.202 segment-routing

!

Great, our tunnel is configured and operationally up. Now, let’s review the tunnel details, including the path-option using Segment-Routing and the path computed by the XTC.

RP/0/0/CPU0:AS65000_PE1#show mpls traffic-eng tunnels 10044

Sun May 23 20:46:25.638 UTC

Name: tunnel-te10044 Destination: 172.16.0.44 Ifhandle:0x1d0

Signalled-Name: AS65000_PE1_t10044

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option 1, (Segment-Routing) type dynamic pce 172.16.0.202 (Basis for Setup)

Reroute pending (Path-option inuse by the current LSP has been modified)

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: IGP (interface)

Path Selection:

Tiebreaker: Min-fill (default)

Protection: any (default)

Hop-limit: disabled

Cost-limit: disabled

Path-invalidation timeout: 10000 msec (default), Action: Tear (default)

Last PCALC Error [Reopt]: Sun May 23 08:42:33 2021

Info: failed to find path

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 0 kbps CT0

Creation Time: Sun May 23 08:38:54 2021 (12:07:31 ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: IGP (interface)

Path Selection:

Tiebreaker: Min-fill (default)

Protection: any (default)

Hop-limit: disabled

Cost-limit: disabled

Path-invalidation timeout: 10000 msec (default), Action: Tear (default)

AutoRoute: disabled LockDown: disabled Policy class: not set

Forward class: 0 (default)

Forwarding-Adjacency: disabled

Autoroute Destinations: 0

Loadshare: 0 equal loadshares

Auto-bw: disabled

Path Protection: Not Enabled

BFD Fast Detection: Disabled

Reoptimization after affinity failure: Enabled

SRLG discovery: Disabled

History:

Tunnel has been up for: 12:05:04 (since Sun May 23 08:41:21 UTC 2021)

Current LSP:

Uptime: 12:05:04 (since Sun May 23 08:41:21 UTC 2021)

Reopt. LSP:

Last Failure:

LSP not signalled, has no S2Ls

Date/Time: Sun May 23 20:46:21 UTC 2021 [00:00:04 ago]

Path info (PCE computed path):

Hop0: 10.0.0.3

Hop1: 10.0.0.7

Hop2: 10.0.0.11

Displayed 1 (of 5) heads, 0 (of 0) midpoints, 0 (of 3) tails

Displayed 1 up, 0 down, 0 recovering, 0 recovered heads

RP/0/0/CPU0:AS65000_PE1#

Looks like everything is working! We could now deploy VPN services across this multi-domain network, as we have fully established a bi-directional LSP path between IS-IS Domain CORE and IS-IS Domain 100.

Troubleshooting commands

- show mpls traffic-eng pce peer

- Validate the session establishment with the XR Traffic Controller.

- show mpls traffic-eng tunnels {tunnel_name}

- View MPLS tunnel information in detail, such as the PCE computed path.

- traceroute mpls traffic-eng tunnel-te {tunnel id}

- MPLS traceroute

Summary

The power of the XR Traffic Controller to let you dynamically create IP/MPLS or Segment-Routing Traffic-Engineered tunnels is incredible. We have only scratched the surface, but this is enough to understand what XTC provides for a large SP network. Some networks running this feature in production have shared their use-cases, and it looks very promising. I can think of a specific merger in my career that could have benefited greatly from this. Because XTC is implemented as a feature of the IOSXR family, it should be easy to adopt in production networks. One thing to note is that BGP-LS plays a KEY role in the success of XTC. Hopefully this has covered enough ground for the SPAUTO exam, and I look forward to taking the exam well prepared on this topic!

What’s Next?!

For my next blog, I will be using a similar use-case with XTC, but also automagically deploying an L3VPN or L2VPN with the help of an NSO instance! This will showcase how incredibly useful NSO can be when paired with XTC from the perspective of a large network operator. Stay tuned!