What a title to this post, right?

With the recent release of Nornir 3.0, I wanted to explore its capabilities, and I already know I will probably never use Ansible for network automation again 😉 The purpose of this post, however, is to give a high-level overview of Nornir 3.0 and provide a guide for converting Nornir 2.x NETCONF scripts to 3.0.

Some of the topics explored in this post include, but are not limited to, the following:

- Infrastructure as Code (Jinja2 template rendering and YAML-defined network state)
- Nornir 3.0
  - Installation
  - Plugins
  - Directory Structure
- NETCONF/YANG
- Netmiko

How to Follow Along

I'd recommend downloading the code from my GitHub and reviewing the repo. Once you are familiar with the code, you should be ready to read along.

CODE:

https://github.com/h4ndzdatm0ld/Norconf

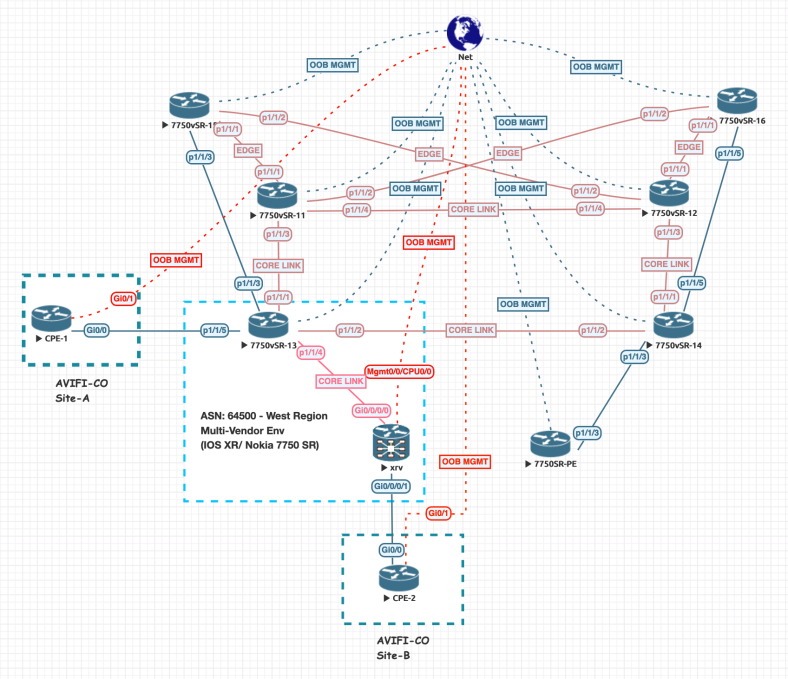

Review the topology below: you will become the operator of this network throughout this journey. The solution you are implementing will ease the workload of the deployment engineers and possibly save your company some money. Depending on the problem a company like XYZ is trying to solve, it's becoming clear that not every organization needs a high-dollar solution, such as a vendor-specific NMS or orchestrator, to automate its network.

For those of you new to Nornir, it's an automation framework written in Python. If you are familiar with Ansible, you can adapt to Nornir quite easily as long as you know your way around Python, and you will quickly realize how flexible it is. One of my favorite features of Nornir is multithreading: it allows concurrent connections, which in turn makes the framework incredibly fast. We will discuss workers/threads a little more later in this post.

Getting Started

Begin by installing Nornir with a simple 'pip3 install nornir'.

Let's discuss the directory structure. As you can see, there is quite a bit going on.

NOTE: All of the following files/directories must be created manually; they are not auto-created. Take a minute and recreate the folders/files under a filepath of your choosing. I started a git repo, and that is where I created all my folders.

We've created defaults, groups and hosts YAML files under our 'inventory' directory, plus a config.yml file that specifies the location of these files. This config file is later passed to InitNornir inside our Python runbook, norconf.py. As always, our 'templates' folder contains the Jinja2 templates that render the L3VPN and VPRN configuration for our multivendor environment. They are named according to their corresponding host platform and function.


Template Naming Example:

- iosxr-vrf.j2 follows the pattern {platform}-{purpose}.j2: 'iosxr' is the platform, 'vrf' is the purpose, and '.j2' is the extension. The template itself is XML data that follows the device's YANG models. Putting the platform in the name lets us use the host's platform during program execution and match it to the file name with an f-string. This is just one way to do it; you may find other approaches that make more sense for your deployment.
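To make the naming scheme concrete, here is a minimal sketch of how a host's platform string maps to a template path. The helper name template_path and the 'templates' directory default are illustrative assumptions; in the runbook the same thing happens inline with an f-string.

```python
from pathlib import Path


def template_path(platform: str, purpose: str = "vrf", template_dir: str = "templates") -> Path:
    """Build '<template_dir>/<platform>-<purpose>.j2' from a host's platform string.

    Hypothetical helper: it mirrors the f-string naming scheme and is not part of Nornir.
    """
    return Path(template_dir) / f"{platform}-{purpose}.j2"


# The two core platforms used in this post (as_posix() keeps forward slashes on every OS).
print(template_path("iosxr").as_posix())         # templates/iosxr-vrf.j2
print(template_path("alcatel_sros").as_posix())  # templates/alcatel_sros-vrf.j2
```

Keeping the platform string identical to the inventory's platform value is what makes the lookup work without any extra mapping.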

Additional files in here, such as nc_tasks.py, are adapted from Nick Russo's project, which uses Nornir 2.x. He wrote some custom NETCONF tasks at a time when NETCONF support was first being introduced into Nornir. The log file is self-explanatory.

At the time of this writing, the nornir_netconf plugin is not yet available for Nornir 3.0 as a direct pip download/install. What followed was a lot of trial and error, mostly error, and I had to take a step back and understand much of what happens under the hood of Nornir. I cloned the repo at https://github.com/nornir-automation/nornir_netconf@first and tried to install it via Poetry, but this was mostly a huge waste of time: nothing worked, particularly the plugin configuration of Nornir. I removed that installation and went the pip route, straight from git.

I was able to install the code with pip + git as follows:

pip3 install git+https://github.com/nornir-automation/nornir_netconf@first

However, during the process I hit the exception "AttributeError: module 'enum' has no attribute 'IntFlag'". From some searching around, it's caused by a conflict with the enum34 backport package. I ran the following to check whether the package was present before removing it:

pip freeze | grep enum34

➜ nornir_netconf-first pip3 freeze | grep enum34

enum34==1.1.10

Looks like I do have it installed. A quick 'pip3 uninstall enum34', a re-run of the original pip3 install from git, and the installation was successful. I wonder what I broke by removing enum34 😉

Installing collected packages: nornir-netconf
Successfully installed nornir-netconf-1.0.0

Python 3.8.2 (v3.8.2:7b3ab5921f, Feb 24 2020, 17:52:18)
[Clang 6.0 (clang-600.0.57)] on darwin
Type "help", "copyright", "credits" or "license" for more information.
>>> import nornir_netconf
>>> print("SO FAR SO GOOD!")
SO FAR SO GOOD!
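If you run into the same AttributeError, a quick way to see which enum module Python is actually importing is sketched below. It assumes Python 3.6 or later, where the standard-library enum module provides IntFlag; the enum34 backport does not, so a missing IntFlag suggests the backport is being loaded instead. The helper name is hypothetical.

```python
import enum


def enum_looks_like_stdlib() -> bool:
    """Return True if 'import enum' resolved to a module that provides IntFlag.

    IntFlag was added to the standard-library enum module in Python 3.6.
    The enum34 backport predates it, so if enum34 is imported instead,
    enum.IntFlag is missing and you get:
    AttributeError: module 'enum' has no attribute 'IntFlag'
    """
    return hasattr(enum, "IntFlag")


if __name__ == "__main__":
    # The file path shows whether the module came from the stdlib or site-packages.
    print("enum module loaded from:", enum.__file__)
    print("IntFlag available:", enum_looks_like_stdlib())
```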

I originally had an issue with the nornir_netconf plugin and had to investigate how to register a plugin manually; that was before I found the git + pip workaround. Here is the code I used to register the plugin directly in my runbook, in case anyone ever wants to register a new plugin (although a lot has to happen for any of this to work):

from nornir.core.plugins.connections import ConnectionPluginRegister
from nornir_netconf.plugins.connections import Netconf

# Register the NETCONF connection plugin class under the name "netconf".
ConnectionPluginRegister.register("netconf", Netconf)


from nornir.core.task import Result

def netconf_edit_config(task, target, config, **kwargs):
    """
    Nornir task to issue a NETCONF edit_config RPC with optional keyword
    arguments. Both the target and config arguments must be specified.
    """
    conn = task.host.get_connection("netconf", task.nornir.config)
    result = conn.edit_config(target=target, config=config, **kwargs)
    return Result(host=task.host, result=result)

By importing this function, I receive the following rpc-reply from a successful RPC operation. This is critical to my script, since I write conditional statements based on the output of the task.run result.

<?xml version="1.0"?>
<rpc-reply message-id="urn:uuid:28f57844-94cb-4ecc-b927-ba1f5318eab7" xmlns:nc="urn:ietf:params:xml:ns:netconf:base:1.0" xmlns="urn:ietf:params:xml:ns:netconf:base:1.0"><ok/>
</rpc-reply>
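ncclient already exposes this check as the reply's .ok attribute, which the runbook uses later. As an illustration of what that check amounts to on the raw XML, here is a standard-library sketch (hypothetical helper reply_is_ok) that looks for <ok/> in the NETCONF base namespace and treats any <rpc-error> as a failure. Treat it as a simplification, not a replacement for ncclient's own parsing.

```python
import xml.etree.ElementTree as ET

# Base namespace defined by RFC 6241 for NETCONF RPC messages.
NETCONF_BASE_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"


def reply_is_ok(reply_xml: str) -> bool:
    """Return True if an <rpc-reply> contains <ok/> and no <rpc-error>."""
    root = ET.fromstring(reply_xml)
    # ElementTree uses {namespace}tag notation for namespaced elements.
    has_ok = root.find(f"{{{NETCONF_BASE_NS}}}ok") is not None
    has_error = root.find(f".//{{{NETCONF_BASE_NS}}}rpc-error") is not None
    return has_ok and not has_error


sample = (
    '<?xml version="1.0"?>'
    '<rpc-reply message-id="urn:uuid:28f57844-94cb-4ecc-b927-ba1f5318eab7" '
    'xmlns:nc="urn:ietf:params:xml:ns:netconf:base:1.0" '
    'xmlns="urn:ietf:params:xml:ns:netconf:base:1.0"><ok/></rpc-reply>'
)
print(reply_is_ok(sample))  # True
```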

The Host File:

---
R3_CSR:
  hostname: 192.168.0.223
  groups:
    - CSR
R3_SROS_PE:
  hostname: 192.168.0.222
  groups:
    - NOKIA
  data:
    region: west-region
R8_IOSXR_PE:
  hostname: 192.168.0.182
  groups:
    - IOSXR
  data:
    region: west-region

The Group File

- The data.target key is inherited by the hosts and referenced when the edit-config RPC executes, pointing the operation at the correct NETCONF datastore.

- These connection options can make or break the process.
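Since the group file itself isn't shown here, the sketch below is a simplified, hypothetical model of how a host ends up with data.target: Nornir checks the host's own data first, then its groups, then the defaults. The dicts mirror the hosts file above; the IOSXR group's values are assumed, and nested groups (which Nornir also walks) are ignored.

```python
from typing import Any, Dict


def lookup_data(key: str, host: Dict[str, Any], groups: Dict[str, Dict[str, Any]],
                defaults: Dict[str, Any]) -> Any:
    """Simplified model of inventory data inheritance (hypothetical helper):
    host data first, then each parent group in order, then defaults."""
    if key in host.get("data", {}):
        return host["data"][key]
    for group_name in host.get("groups", []):
        group_data = groups.get(group_name, {}).get("data", {})
        if key in group_data:
            return group_data[key]
    if key in defaults.get("data", {}):
        return defaults["data"][key]
    raise KeyError(key)


hosts = {
    "R3_SROS_PE": {"hostname": "192.168.0.222", "groups": ["NOKIA"], "data": {"region": "west-region"}},
    "R8_IOSXR_PE": {"hostname": "192.168.0.182", "groups": ["IOSXR"], "data": {"region": "west-region"}},
}
groups = {
    "NOKIA": {"platform": "alcatel_sros", "data": {"target": "candidate"}},
    "IOSXR": {"platform": "iosxr", "data": {"target": "candidate"}},  # assumed values
}
defaults: Dict[str, Any] = {}

print(lookup_data("target", hosts["R3_SROS_PE"], groups, defaults))  # candidate (from group)
print(lookup_data("region", hosts["R3_SROS_PE"], groups, defaults))  # west-region (from host)
```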

The Config File

- A config.yml file must specify the location of the hosts, groups and defaults files.

Threads

We have set num_workers to 100, which means Nornir can run up to 100 concurrent threads, i.e., up to 100 simultaneous sessions to devices. The way I think about Nornir's run process is that everything you do happens inside a giant 'for loop': each task runs against every device in the inventory (unless you apply a filter). Although there isn't a for statement written anywhere visible, you are looping through all the devices in your inventory. With threads, however, those iterations actually run in parallel. You could technically specify 'plugin: serial' and not take advantage of threads at all.
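For reference, in a Nornir 3 config file this is set under runner, with plugin: threaded and options: num_workers: 100. The "giant for loop" idea can be sketched with the standard library's ThreadPoolExecutor; this is an analogy, not Nornir's runner code, and fake_task is a hypothetical stand-in for a real task.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def run_serial(hosts: List[str], task: Callable[[str], str]) -> Dict[str, str]:
    """Like 'plugin: serial': a plain for loop, one host after another."""
    results = {}
    for host in hosts:
        results[host] = task(host)
    return results


def run_threaded(hosts: List[str], task: Callable[[str], str], num_workers: int = 100) -> Dict[str, str]:
    """Like a threaded runner: the same loop, but up to num_workers hosts at once."""
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # map() yields results in input order, so zip pairs each host with its own result.
        return dict(zip(hosts, pool.map(task, hosts)))


def fake_task(host: str) -> str:
    """Hypothetical stand-in for a real Nornir task such as iac_render."""
    return f"configured {host}"


hosts = ["R3_SROS_PE", "R8_IOSXR_PE"]
print(run_threaded(hosts, fake_task) == run_serial(hosts, fake_task))  # True
```

Both runners produce the same results; the threaded one simply overlaps the time spent waiting on each device.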

Plugins

pip3 install nornir_utils
pip3 install nornir_netmiko
pip3 install nornir_jinja2

Run Book (Python Script of Compiled ‘tasks’)

from nornir import InitNornir
from nornir_netmiko.tasks import netmiko_send_command
from nornir_utils.plugins.functions import print_result
from nornir_utils.plugins.tasks.data import load_yaml
from nornir_jinja2.plugins.tasks import template_file
from nornir_netconf.plugins.tasks import netconf_edit_config
# NOTE: the nc_tasks import below shadows the netconf_edit_config imported above.
from nc_tasks import netconf_edit_config, netconf_commit
import xmltodict, json, pprint

__author__ = 'Hugo Tinoco'
__email__ = 'hugotinoco@icloud.com'

# Specify a custom config yaml file.
nr = InitNornir('config.yml')

Filters

What are filters and how do we create them? A filter is a selection of hosts you want to execute a runbook against. In our main example, we are an operator in charge of deploying an L3VPN/VPRN in a multi-vendor environment at the core, which includes a Nokia SR 7750 and a Cisco IOSxR router. However, our hosts file contains ALL of the devices available in our network. The L3VPN we are deploying only spans our 'west-region', pictured at the bottom left of the topology above. There are two CPEs, one attached to the Nokia 7750 and one to the Cisco IOSxR. To deploy this service, we tell Nornir to execute the tasks against only these two routers; the rest of the network doesn't need to know about this service. Below is a snippet of the 'hosts.yml' file with a custom 'region' key set to 'west-region'. The same key/value pair appears on the R8_IOSXR_PE device. That's it! We've identified common ground between these devices: both are in the 'west-region' of our network.

R3_SROS_PE:
  hostname: 192.168.0.222
  groups:
    - NOKIA
  data:
    region: west-region
R8_IOSXR_PE:
  hostname: 192.168.0.182
  groups:
    - IOSXR
  data:
    region: west-region

Now let's write some code so Nornir knows to use this filter.

# Filter the hosts by the 'west-region' site key.
west_region = nr.filter(region='west-region')
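Conceptually, a keyword filter like this keeps only the hosts whose attributes match every key/value pair you pass. A simplified, hypothetical model over plain dicts (it only checks host-level data, not values inherited from groups or defaults):

```python
from typing import Any, Dict


def filter_hosts(hosts: Dict[str, Dict[str, Any]], **criteria: Any) -> Dict[str, Dict[str, Any]]:
    """Keep hosts whose data matches every key=value pair in criteria.

    Hypothetical stand-in for nr.filter(region='west-region').
    """
    return {
        name: host
        for name, host in hosts.items()
        if all(host.get("data", {}).get(key) == value for key, value in criteria.items())
    }


hosts = {
    "R3_CSR": {"hostname": "192.168.0.223", "groups": ["CSR"], "data": {}},
    "R3_SROS_PE": {"hostname": "192.168.0.222", "groups": ["NOKIA"], "data": {"region": "west-region"}},
    "R8_IOSXR_PE": {"hostname": "192.168.0.182", "groups": ["IOSXR"], "data": {"region": "west-region"}},
}
print(sorted(filter_hosts(hosts, region="west-region")))  # ['R3_SROS_PE', 'R8_IOSXR_PE']
```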

Infrastructure as Code

We'll extract user-supplied variables from our YAML files and combine them with our Jinja2 templates, which contain our YANG-modeled XML. Jinja2 places the correct variables into the YANG models for proper rendering. To distribute the configurations via NETCONF across our core network, we enlist Nornir to manage all of these tasks, letting it handle the flow and procedures that ensure a proper deployment.

VARS

Below is the yaml file containing our vars which will be utilized to render the j2 template. The following is for the Nokia platform:

---
VRF:
  - SERVICE_NAME: AVIFI
    SERVICE_ID: '100'
    CUSTOMER_ID: 200
    CUSTOMER_NAME: AVIFI-CO
    DESCRIPTION: AVIFI-CO
    ASN: '64500'
    RD: 100
    RT: 100
    INTERFACE_NAME: TEST-LOOPBACK
    INTERFACE_ADDRESS: 3.3.3.3
    INTERFACE_PREFIX: 32
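Before rendering, it can help to sanity-check each VRF entry and preview the composite values the Nokia template builds from it. A minimal sketch, assuming the vars file loads into the dict shown (quoted YAML values stay strings, unquoted numbers become ints); derived_values and REQUIRED_KEYS are hypothetical. The values it computes are the ones you'd expect to see later as Route Dist. and Vrf Target in the show output.

```python
from typing import Any, Dict

REQUIRED_KEYS = ("SERVICE_NAME", "SERVICE_ID", "CUSTOMER_ID", "CUSTOMER_NAME",
                 "ASN", "RD", "RT", "INTERFACE_NAME", "INTERFACE_ADDRESS", "INTERFACE_PREFIX")


def derived_values(vrf: Dict[str, Any]) -> Dict[str, str]:
    """Check one VRF entry and compute the strings the Nokia template builds from it."""
    missing = [key for key in REQUIRED_KEYS if key not in vrf]
    if missing:
        raise ValueError(f"VRF entry is missing: {', '.join(missing)}")
    return {
        # Same expressions as {{VRF.ASN}}:{{VRF.RD}} and target:{{VRF.ASN}}:{{VRF.RT}}
        "route-distinguisher": f"{vrf['ASN']}:{vrf['RD']}",
        "vrf-target": f"target:{vrf['ASN']}:{vrf['RT']}",
    }


# The vars above, as the Python structure a YAML loader would return for them.
vars_data = {"VRF": [{
    "SERVICE_NAME": "AVIFI", "SERVICE_ID": "100", "CUSTOMER_ID": 200,
    "CUSTOMER_NAME": "AVIFI-CO", "DESCRIPTION": "AVIFI-CO", "ASN": "64500",
    "RD": 100, "RT": 100, "INTERFACE_NAME": "TEST-LOOPBACK",
    "INTERFACE_ADDRESS": "3.3.3.3", "INTERFACE_PREFIX": 32,
}]}

for vrf in vars_data["VRF"]:
    print(derived_values(vrf))
```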

Jinja 2 – yang:sr:conf

There are many important pieces to this automation project. The J2 template file must include everything necessary to create the service. Below is the example for the Nokia device; see my GitHub repo (linked at the top of this post) for the IOSxR J2 template. There are also supporting documents at the end of this post if you need more information on Jinja2.


{% for VRF in data %}
<config>
  <configure xmlns="urn:nokia.com:sros:ns:yang:sr:conf">
    <service>
      <customer>
        <customer-name>{{VRF.CUSTOMER_NAME}}</customer-name>
        <customer-id>{{VRF.CUSTOMER_ID}}</customer-id>
      </customer>
      <vprn>
        <service-name>{{VRF.SERVICE_NAME}}</service-name>
        <service-id>{{VRF.SERVICE_ID}}</service-id>
        <admin-state>enable</admin-state>
        <customer>{{VRF.CUSTOMER_NAME}}</customer>
        <autonomous-system>{{VRF.ASN}}</autonomous-system>
        <route-distinguisher>{{VRF.ASN}}:{{VRF.RD}}</route-distinguisher>
        <vrf-target>
          <community>target:{{VRF.ASN}}:{{VRF.RT}}</community>
        </vrf-target>
        <auto-bind-tunnel>
          <resolution>any</resolution>
        </auto-bind-tunnel>
        <interface>
          <interface-name>{{VRF.INTERFACE_NAME}}</interface-name>
          <loopback>true</loopback>
          <ipv4>
            <primary>
              <address>{{VRF.INTERFACE_ADDRESS}}</address>
              <prefix-length>{{VRF.INTERFACE_PREFIX}}</prefix-length>
            </primary>
          </ipv4>
        </interface>
      </vprn>
    </service>
  </configure>
</config>
{% endfor %}
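Because the template loops over every VRF, each entry produces its own <config> element, so a list with more than one VRF renders into several top-level elements rather than a single XML document. A small pre-flight check, sketched below with the standard library, catches that (and any other malformed XML) before anything is sent over NETCONF. The helper name check_payload and the sample payload are hypothetical.

```python
import xml.etree.ElementTree as ET


def check_payload(payload: str) -> ET.Element:
    """Parse a rendered payload and return its root element.

    Raises ET.ParseError if the text is not exactly one well-formed XML
    document, and ValueError if the root element is not <config>.
    """
    root = ET.fromstring(payload.strip())
    if root.tag != "config":
        raise ValueError(f"expected a <config> root element, got <{root.tag}>")
    return root


# Hand-written stand-in for one rendered VRF (trimmed to a few leaves).
one_vrf = """
<config>
  <configure xmlns="urn:nokia.com:sros:ns:yang:sr:conf">
    <service><vprn><service-name>AVIFI</service-name></vprn></service>
  </configure>
</config>
"""
print(check_payload(one_vrf).tag)  # config
```

If you do need several VRFs in one payload, moving the for loop inside a single <config> element is one way to keep the output a single document.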

Runbook Walkthrough


def get_vrfcli(task, servicename):
    ''' Retrieve VRF from IOSXR
    '''
    vrf = task.run(netmiko_send_command, command_string=f"sh vrf {servicename} detail")
    print_result(vrf)

def get_vprncli(task, servicename):
    ''' Retrieve VPRN from Nokia
    '''
    vprn = task.run(netmiko_send_command, command_string=f"show service id {servicename} base")
    print_result(vprn)

def cli_stats(task, **kwargs):
    ''' Revert to CLI scraping automation to retrieve simple show commands and verify status of Services / L3 connectivity.
    '''
    # Load Yaml file to extract specific vars
    vars_yaml = f"vars/{task.host}.yml"
    vars_data = task.run(task=load_yaml, file=vars_yaml)
    # Capture the Service Name:
    servicename = vars_data.result['VRF'][0]['SERVICE_NAME']
    if task.host.platform == 'alcatel_sros':
        get_vprncli(task, servicename)
    elif task.host.platform == 'iosxr':
        get_vrfcli(task, servicename)
    else:
        print(f"{task.host.platform} Not supported in this runbook")

Our overall goal is to deploy the VPRN/L3VPN. We start by creating a few custom functions.

We create get_vrfcli and get_vprncli. These two functions use the netmiko_send_command plugin with platform-specific CLI commands to retrieve the service status. We then wrap the two functions inside cli_stats, which loads the host's vars file with Nornir's load_yaml plugin. Once that task has run, we drill into the loaded dictionary and extract the service name, which is then passed into get_vprncli/get_vrfcli to execute against our devices. When we execute cli_stats against our west-region filter, the conditional statements run the correct command against the correct platform. We access the platform through the task.host.platform attribute, which returns the platform value from the inventory.
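As the number of supported platforms grows, the if/elif chain can also be written as a lookup table. A minimal, hypothetical sketch using the same two show commands; inside cli_stats you would pass the returned string to task.run(netmiko_send_command, command_string=...).

```python
from typing import Optional

# Map each supported platform to the show command used in cli_stats.
SHOW_COMMANDS = {
    "alcatel_sros": "show service id {service} base",
    "iosxr": "sh vrf {service} detail",
}


def show_command_for(platform: str, service: str) -> Optional[str]:
    """Return the platform's show command for a service, or None if unsupported."""
    template = SHOW_COMMANDS.get(platform)
    return template.format(service=service) if template is not None else None


print(show_command_for("alcatel_sros", "AVIFI"))  # show service id AVIFI base
print(show_command_for("iosxr", "AVIFI"))         # sh vrf AVIFI detail
```

Adding a platform then means adding one dictionary entry rather than another elif branch.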

NOTE:

I am working on a video tutorial and demonstration of Nornir 3.0. In the video, I will create additional tasks that verify L3 connectivity with simple ping commands.

Bulk of the Code:


def iac_render(task):
    ''' Load the YAML vars and render the Jinja2 Templates. Deploy L3VPN/VPRN via NETCONF.
    '''
    # Load Yaml Files by Hostname
    vars_yaml = f"vars/{task.host}.yml"
    vars_data = task.run(task=load_yaml, file=vars_yaml)
    # With the YAML variables loaded, render the Jinja2 template that matches the host platform.
    template = f"{task.host.platform}-vrf.j2"
    vprn = task.run(task=template_file, path='templates/', template=template, data=vars_data.result['VRF'])
    # Convert the generated template into a string.
    payload = str(vprn.result)
    # Extract the custom data.target attribute passed into the group of hosts to specify the 'candidate' target
    # configuration store to run the edit_config rpc against on our task.device.
    # IOSXR/Nokia take advantage of candidate/lock via netconf.
    deploy_config = task.run(task=netconf_edit_config, target=task.host['target'], config=payload)
    # Extract the new Service ID created:
    if task.host.platform == 'alcatel_sros':
        for vrf in vars_data.result['VRF']:
            serviceid = vrf['SERVICE_ID']
            servicename = vrf['SERVICE_NAME']
            # Ensure the customer-id is always interpreted as a string:
            customer = vrf['CUSTOMER_ID']
            customerid = str(customer)
    if task.host.platform == 'iosxr':
        for vrf in vars_data.result['VRF']:
            servicename = vrf['SERVICE_NAME']
            serviceid = None
    rpcreply = deploy_config.result
    if rpcreply.ok:
        print(f"NETCONF RPC = OK. Committing Changes:: {task.host.platform}")
        task.run(task=netconf_commit)
        # Validate service on the 7750.
        if task.host.platform == 'alcatel_sros':
            nc_getvprn(task, serviceid=serviceid, servicename=servicename, customerid=customerid)
        elif task.host.platform == 'iosxr':
            # TODO: duplicate the nc_getvprn function but for iosxr
            pass
    else:
        # The RPC reply was not OK: print it so the error is visible.
        print(f"NETCONF Error. {rpcreply}")

Let's review the iac_render function. We simply load our YAML vars and render our J2 templates. Pay special attention to the following line:

template = f"{task.host.platform}-vrf.j2"

This allows us to select the template that matches the host while the task executes. Using an f-string, we pass in task.host.platform and append '-vrf.j2', which matches the name of our stored XML templates inside the templates directory. Example: "iosxr-vrf.j2".

At this point we have our payload to deploy against our devices. One thing to note: task.run returns a Nornir result object rather than a plain string, so make sure the rendered template gets converted to a str: "payload = str(vprn.result)". We pass this into our netconf_edit_config task as the payload to deploy via NETCONF.

deploy_config = task.run(task=netconf_edit_config, target=task.host['target'], config=payload)

Let's examine this line of code. We assign 'deploy_config' as the variable holding the output of our task. The task we execute is the 'netconf_edit_config' function. Again, this is a wrapper around ncclient, which I hope you're familiar with; if not, give it a Google search or review the additional resources at the bottom of the post.

Now, 'target=task.host['target']' is the datastore to use during our NETCONF RPC call. We specified this for our hosts inside our groups file. See below:

NOKIA:
  username: 'admin'
  password: 'admin'
  platform: alcatel_sros
  port: 22
  data:
    target: candidate

NETCONF has three configuration datastores. Running is always present; startup and candidate are optional capabilities that a device advertises.

- Running

- Startup

- Candidate

In my opinion, candidate is the most valuable datastore. We can stage a config change and validate it, and only once we are sure of it do we commit the change. As the operator of this network, we must be sure not to cause any outages or rippling effects from our automation. We will inspect the RPC reply, make sure all is good, and if so, commit our changes for the customer.
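To illustrate why the candidate datastore is safer, here is a toy model in plain Python (not a NETCONF client): edits only touch the candidate copy, running changes only on commit, and discard-changes throws staged edits away. The class and method names are hypothetical and only echo the RFC 6241 operation names.

```python
import copy
from typing import Any, Dict


class ToyDatastores:
    """Toy model of NETCONF candidate/running semantics (not a NETCONF client)."""

    def __init__(self, running: Dict[str, Any]) -> None:
        self.running = copy.deepcopy(running)
        self.candidate = copy.deepcopy(running)

    def edit_config(self, changes: Dict[str, Any]) -> None:
        # Staged edits land in the candidate only; the live config is untouched.
        self.candidate.update(copy.deepcopy(changes))

    def commit(self) -> None:
        # Commit makes the candidate the new running configuration.
        self.running = copy.deepcopy(self.candidate)

    def discard_changes(self) -> None:
        # Throw away anything staged since the last commit.
        self.candidate = copy.deepcopy(self.running)


store = ToyDatastores({})
store.edit_config({"vprn AVIFI": {"service-id": "100"}})
print("vprn AVIFI" in store.running)  # False: nothing is live yet
store.commit()
print("vprn AVIFI" in store.running)  # True: live after commit
```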

After the edit-config call, a conditional statement digs into the platform of the host the task is running against. We simply compare it to alcatel_sros or iosxr, as those are the two core devices in this example. From our loaded YAML file we extract a few items that we will use to print results to the screen in a readable format. We do the same for our IOSxR host.

At this point, the netconf_edit_config wrapper for ncclient should have executed the NETCONF RPC and edited the configuration.

We store the reply in a variable called rpcreply by extracting the .result attribute from our original deploy_config variable. This gives us ncclient's RPC reply, and we can check its result with 'if rpcreply.ok:'.

If the reply is OK, we print simple feedback saying so, then run the netconf_commit task to commit the change.

Finally, let's validate some of the applied services with our custom function 'nc_getvprn' against our Nokia 7750.

nc_getvprn(task, serviceid=serviceid, servicename=servicename, customerid=customerid)

Earlier, we extracted some vars from our YAML file and loaded them into our script as 'serviceid', 'servicename' and 'customerid'. We use these variables to execute the task and gather information by parsing the result of the netconf_get_config RPC call. We process that information with xmltodict.parse, converting the XML into a Python dictionary, and compare the values found in our running configuration against the desired state of our network element. Infrastructure as code is fun, right? Once we've compared our items, we print meaningful output to the screen to let us, the operator, know that everything is configured as expected.

If you are not familiar with xmltodict, I will provide additional references at the bottom of this document.

At the time of this writing, I have only completed the compliance check against the Nokia SR OS device. I will most likely extend this code to do the same against the IOSxR device. You can find the custom 'nc_getvprn' function we just described in the code on my GitHub, linked at the top of this post.
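As a rough illustration of the same compliance idea, the sketch below uses the standard library's ElementTree instead of xmltodict: it reads a few leaves from an SR OS <configure> tree and compares them with the desired values. The element paths follow the Nokia template above; the function, field names and sample XML are hypothetical, and desired values are compared as strings, much like the runbook converts the customer ID with str().

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict

# Namespace from the Nokia template's <configure> element.
SROS_NS = {"sr": "urn:nokia.com:sros:ns:yang:sr:conf"}

# Which leaf to read for each field we want to check (hypothetical field names).
PATHS = {
    "customer_name": ".//sr:service/sr:customer/sr:customer-name",
    "customer_id": ".//sr:service/sr:customer/sr:customer-id",
    "service_name": ".//sr:service/sr:vprn/sr:service-name",
    "service_id": ".//sr:service/sr:vprn/sr:service-id",
}


def check_vprn(config_xml: str, desired: Dict[str, Any]) -> Dict[str, bool]:
    """Return {field: True/False} for each desired value found in the config XML."""
    root = ET.fromstring(config_xml)
    report = {}
    for field, wanted in desired.items():
        found = root.findtext(PATHS[field], namespaces=SROS_NS)
        report[field] = found == str(wanted)
    return report


# Hand-written sample shaped like the rendered Nokia template.
sample = """<configure xmlns="urn:nokia.com:sros:ns:yang:sr:conf">
  <service>
    <customer><customer-name>AVIFI-CO</customer-name><customer-id>200</customer-id></customer>
    <vprn><service-name>AVIFI</service-name><service-id>100</service-id><customer>AVIFI-CO</customer></vprn>
  </service>
</configure>"""

desired = {"customer_name": "AVIFI-CO", "customer_id": 200, "service_name": "AVIFI", "service_id": "100"}
print(check_vprn(sample, desired))
```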

Execution


def main():
    west_region.run(task=iac_render)
    west_region.run(task=cli_stats)

if __name__ == "__main__":
    main()

It's that easy. We run our tasks against our west_region filter to narrow down the hosts in our multi-vendor environment. Let's review the output!


➜ Norconf git:(master) ✗ python3 norconf.py
NETCONF RPC = OK. Committing Changes:: alcatel_sros
NETCONF RPC = OK. Committing Changes:: iosxr
SR Customer: AVIFI-CO
SR Customer ID: 200
SR Service Name: AVIFI
vvvv netmiko_send_command ** changed : False vvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvv INFO
===============================================================================
Service Basic Information
===============================================================================
Service Id : 100 Vpn Id : 0
Service Type : VPRN
MACSec enabled : no
Name : AVIFI
Description : (Not Specified)
Customer Id : 200 Creation Origin : manual
Last Status Change: 09/18/2020 08:56:02
Last Mgmt Change : 09/18/2020 08:56:02
Admin State : Up Oper State : Up
Router Oper State : Up
Route Dist. : 64500:100 VPRN Type : regular
Oper Route Dist : 64500:100
Oper RD Type : configured
AS Number : 64500 Router Id : 10.10.10.3
ECMP : Enabled ECMP Max Routes : 1
Max IPv4 Routes : No Limit
Auto Bind Tunnel
Resolution : any
Weighted ECMP : Disabled ECMP Max Routes : 1
Max IPv6 Routes : No Limit
Ignore NH Metric : Disabled
Hash Label : Disabled
Entropy Label : Disabled
Vrf Target : target:64500:100
Vrf Import : None
Vrf Export : None
MVPN Vrf Target : None
MVPN Vrf Import : None
MVPN Vrf Export : None
Car. Sup C-VPN : Disabled
Label mode : vrf
BGP VPN Backup : Disabled
BGP Export Inactv : Disabled
LOG all events : Disabled
SAP Count : 0 SDP Bind Count : 0
VSD Domain : <none>
-------------------------------------------------------------------------------
Service Access & Destination Points
-------------------------------------------------------------------------------
Identifier Type AdmMTU OprMTU Adm Opr
-------------------------------------------------------------------------------
No Matching Entries
===============================================================================
^^^^ END netmiko_send_command ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
vvvv netmiko_send_command ** changed : False vvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvv INFO
Thu Sep 24 09:10:56.099 UTC
VRF AVIFI; RD 64500:100; VPN ID not set
VRF mode: Regular
Description AVIFI-CO
Interfaces:
Loopback100
Loopback200
Address family IPV4 Unicast
Import VPN route-target communities:
RT:64500:100
Export VPN route-target communities:
RT:64500:100
No import route policy
No export route policy
Address family IPV6 Unicast
No import VPN route-target communities
No export VPN route-target communities
No import route policy
No export route policy

From the output above, we can see that we deployed our L3VPN (IOSxR) and VPRN (Nokia) services. After taking full advantage of IaC + Nornir, we fall back to CLI-scraping automation and rely on Netmiko to run simple show commands that confirm the VRF is actually present and validate the services.